How To Access S3 Bucket From Hadoop

A common question is how to connect to an Amazon S3 bucket from HDFS. There are multiple ways to connect to an S3 bucket.

Use the S3A connector. The S3A connector is the recommended method for Hadoop to interact with S3. The best practice is to run Hadoop on an instance created with an EC2 instance profile role, and the S3 access is specified as a … Specifying org.apache.hadoop.fs.s3a.AnonymousAWSCredentialsProvider allows anonymous access to a publicly …

With S3DistCp, you can efficiently copy large amounts of data from Amazon S3 into the Hadoop Distributed File System (HDFS).

Instead of using the public internet or a proxy solution to migrate data, you can use AWS PrivateLink for Amazon S3 to migrate data to Amazon S3 over a private …
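The S3A and S3DistCp options mentioned above can be sketched as command lines. The Python helper below only builds the argument lists; it does not run anything. It is a minimal sketch under several assumptions: the bucket name `example-bucket`, the `logs/` prefix, and the `hdfs:///data/logs` destination are hypothetical, `hadoop` is assumed to be on the PATH with the S3A connector available, and `s3-dist-cp` is assumed to be available (it normally ships with Amazon EMR). When no credentials provider is set, the sketch relies on whatever default S3A credential chain the cluster is configured with.

```python
import shlex
from typing import List

# Class name taken from the text above; it tells S3A to send unsigned requests.
ANONYMOUS_PROVIDER = "org.apache.hadoop.fs.s3a.AnonymousAWSCredentialsProvider"


def s3a_uri(bucket: str, key: str = "") -> str:
    """Return an s3a:// URI for a bucket and an optional key or prefix."""
    return f"s3a://{bucket}/{key.lstrip('/')}"


def hadoop_ls_command(bucket: str, key: str = "", anonymous: bool = False) -> List[str]:
    """Build the argv for `hadoop fs -ls` against an S3A path."""
    argv = ["hadoop", "fs"]
    if anonymous:
        # Generic -D options go before the -ls subcommand.
        argv += ["-D", f"fs.s3a.aws.credentials.provider={ANONYMOUS_PROVIDER}"]
    argv += ["-ls", s3a_uri(bucket, key)]
    return argv


def s3distcp_command(bucket: str, key: str, hdfs_dest: str) -> List[str]:
    """Build the argv for an S3DistCp copy from S3 into HDFS."""
    src = f"s3://{bucket}/{key.lstrip('/')}"
    return ["s3-dist-cp", "--src", src, "--dest", hdfs_dest]


if __name__ == "__main__":
    # Hypothetical bucket, prefix, and destination, printed as shell commands.
    print(shlex.join(hadoop_ls_command("example-bucket", "logs/", anonymous=True)))
    print(shlex.join(s3distcp_command("example-bucket", "logs/", "hdfs:///data/logs")))
```

Returning argument lists rather than shell strings keeps quoting explicit; the lists can be passed to `subprocess.run` on a machine where the tools are actually installed.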

from www.vrogue.co

Guide To Ftpsftp Access To An Amazon S3 Bucket Step B vrogue.co

From www.youtube.com

AWS S3 Bucket Permissions, Permissions S3 Bucket, Configuring an AWS

From dxovqnqad.blob.core.windows.net

How To Access S3 Bucket From Redshift at Joyce Todd blog

From www.youtube.com

How to get AWS access key and secret key id for s3 YouTube

From www.youtube.com

Secure S3 by identifying buckets with "Public Access" YouTube

From www.exatosoftware.com

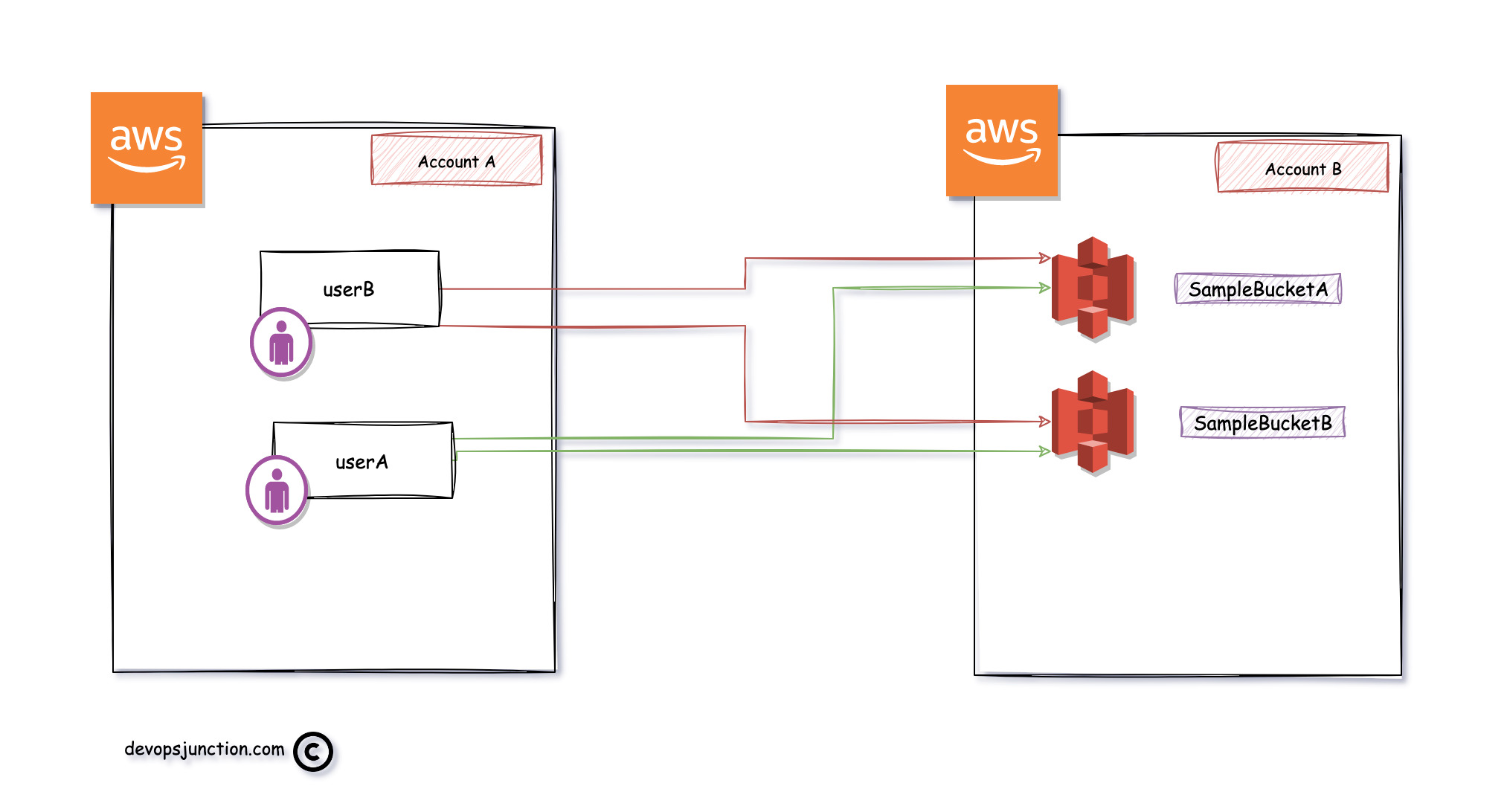

How to access S3 bucket from another account Exato Software

From www.youtube.com

How to access s3 bucket from python? YouTube

From dxopymzkj.blob.core.windows.net

Access S3 Bucket From Aws Console at Estrella Sussman blog

From xiaoxubeii.github.io

Hadoop/Spark on S3 Tim's Path

From exoqxxeue.blob.core.windows.net

How To Access S3 Bucket Through Cli at Norma Jackson blog

From www.vrogue.co

Guide To Ftpsftp Access To An Amazon S3 Bucket Step B vrogue.co

From www.studypool.com

SOLUTION Create Roles and Access S3 bucket from EC2 Studypool

From kodyaz.com

S3 Browser Tool for Accessing and Managing Amazon S3 Buckets

From dxoglsgfi.blob.core.windows.net

Php List Files In S3 Bucket at Cox blog

From magecomp.com

How to Generate an Amazon S3 Bucket and Obtain AWS Access Key ID and

From www.vrogue.co

How To Mount S3 Bucket On Aws Ec2 Step By Step Vrogue

From blog.webnersolutions.com

Access S3 buckets from EC2 instances ner Blogs eLearning

From www.youtube.com

How to access S3 Bucket from EC2 Instance using IAM Role Terraform